数据分析和建模方面的大量编程工作都是用在数据准备上的:加载、清理、转换、重塑。是因为,多数时候存放在文件或数据库中的数据不能满足你的数据处理应用的要求。

7.1 合并数据集

pandas对象中的数据可以通过一些内置的方式进行合并:

pandas.merge可根据一个或多个键将不同的DataFrame中的行连接起来。我们的SQL或其他的关系型数据库就是实现数据库的连接操作

pandas.concat可以沿着一条轴将多个对象堆叠在一起。

实例方法combine_first可以将重复数据编接在一起,用一个对象中的值填充另一个对象中的缺失值。

7.1.1 数据库风格的DataFrame合并

数据集的合并(merge)或连接(join)运算是通过一个或多个键将行连接起来。这些运算是关系型数据库的核心。对数据应用的这些算法的主要以pandas的merge函数为切入点。

In [38]: import pandas as pd

In [39]: from pandas import DataFrame

In [40]: df1=DataFrame({'key':['b','b','a','c','a','a','b'],'data1':range(7)})

In [41]: df2=DataFrame({'key':['b','b','d'],'data2':range(3)})

In [42]: df1

Out[42]:

data1 key

0 0 b

1 1 b

2 2 a

3 3 c

4 4 a

5 5 a

6 6 b

In [43]: df2

Out[43]:

data2 key

0 0 b

1 1 b

2 2 d

这是一种多对一的合并。对这些对象调用merge即可得到:

In [44]: pd.merge(df1,df2)

Out[44]:

data1 key data2

0 0 b 0

1 0 b 1

2 1 b 0

3 1 b 1

4 6 b 0

5 6 b 1

上述的代码并没有指定需要哪个列进行连接。若未指定,merge就会将重叠列的列名当做键。可以显式的指定一下:

In [7]: pd.merge(df1,df2,on="key")

Out[7]:

data1 key data2

0 0 b 0

1 0 b 1

2 1 b 0

3 1 b 1

4 6 b 0

5 6 b 1

如果两个对象的列名不同,也可以分别进行指定:

In [8]: df3=DataFrame({'1key':['b','b','a','c','a','a','b'],'data1':range(7)})

In [9]: df4=DataFrame({'rkey':['a','b','d'],'data2':range(3)})

In [10]: pd.merge(df3,df4,left_on='1key',right_on='rkey')

Out[10]:

1key data1 data2 rkey

0 b 0 1 b

1 b 1 1 b

2 b 6 1 b

3 a 2 0 a

4 a 4 0 a

5 a 5 0 a

在上述的结果中,C和d以及与之相关的数据不见了,默认下,merge做的是"inner"的连接;

结果中的键是交集,其他方式还有“left”,“right”以及“outer”。外连接求取的是键的并集,组合了左连接和右连接的效果:

多对多的合并非常简单,甚至无需要额外的工作,如下所示:

In [17]: df1=DataFrame({'key':['b','b','a','c','a','b'],'data1':range(6)})

In [19]: df2=DataFrame({'key':['a','b','a','b','d'],'data2':range(5)})

In [20]: df1

Out[20]:

data1 key

0 0 b

1 1 b

2 2 a

3 3 c

4 4 a

5 5 b

In [21]: df2

Out[21]:

data2 key

0 0 a

1 1 b

2 2 a

3 3 b

4 4 d

In [22]: pd.merge(df1,df2,on='key',how='left')

Out[22]:

data1 key data2

0 0 b 1.0

1 0 b 3.0

2 1 b 1.0

3 1 b 3.0

4 2 a 0.0

5 2 a 2.0

6 3 c NaN

7 4 a 0.0

8 4 a 2.0

9 5 b 1.0

10 5 b 3.0

多对多连接产生的行就是笛卡体积,由于左边的DataFrame有3个“b”行,右边的有2个,最终结果就是6个“b”行。连接方式只影响出现结果中的键:

In [25]: pd.merge(df1,df2,how='inner')

Out[25]:

data1 key data2

0 0 b 1

1 0 b 3

2 1 b 1

3 1 b 3

4 5 b 1

5 5 b 3

6 2 a 0

7 2 a 2

8 4 a 0

9 4 a 2

要根据多个键盘进行合并,传入一个由列名组成的列表即可:

In [26]: left=DataFrame({'key1':['foo','foo','bar'],'key2':['one','two','one'],'lval':[1,2,3]})

In [27]: right=DataFrame({'key1':['foo','foo','bar','bar'],'key2':['one','one','one','two'],'rval':[4,5,5,7]})

In [28]: pd.merge(left,right,on=['key1','key2'],how='outer')

Out[28]:

key1 key2 lval rval

0 foo one 1.0 4.0

1 foo one 1.0 5.0

2 foo two 2.0 NaN

3 bar one 3.0 5.0

4 bar two NaN 7.0

最后的结果会出现哪些键取决于所选的合并方式。

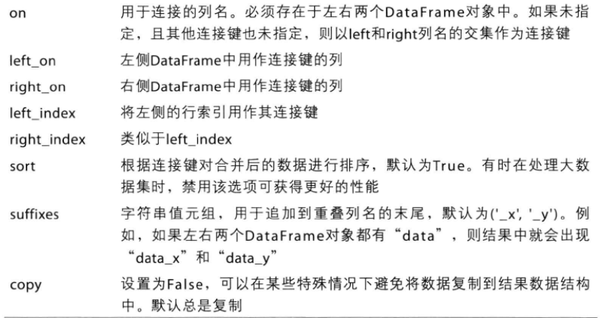

合并运算考虑的最后一个问题是对重复列名的处理。merge有一个实用的suffi选项,用于指定附加到左右两个DataFrame 对象的重叠列名的字符串上:

In [9]: pd.merge(left,right,on="key1")

Out[9]:

key1 key2_x lval key2_y rval

0 foo one 1 one 4

1 foo one 1 one 5

2 foo two 2 one 4

3 foo two 2 one 5

4 bar one 3 one 5

5 bar one 3 two 7

In [10]: pd.merge(left,right,on="key1",suffixes=('_left','_right'))

Out[10]:

key1 key2_left lval key2_right rval

0 foo one 1 one 4

1 foo one 1 one 5

2 foo two 2 one 4

3 foo two 2 one 5

4 bar one 3 one 5

5 bar one 3 two 7

7.2索引上的合并

DataFrame中的连接键有时候位于其索引中。这种情况下,可以传入left_index=True 或 right_index=True(或两个都传)以说明索引应该被用作连接键:

In [11]: left1=DataFrame({'key':['a','b','a','a','b','c'],'value':range(6)})

In [12]: right1=DataFrame({'group_val':[3.5,7]},index=['a','b'])

In [13]: left1

Out[13]:

key value

0 a 0

1 b 1

2 a 2

3 a 3

4 b 4

5 c 5

In [14]: right1

Out[14]:

group_val

a 3.5

b 7.0

In [15]: pd.merge(left1,right1,left_on='key',right_index=True)

Out[15]:

key value group_val

0 a 0 3.5

2 a 2 3.5

3 a 3 3.5

1 b 1 7.0

4 b 4 7.0

In [17]: pd.merge(left1,right1,left_on='key',right_index=True,how='outer')

Out[17]:

key value group_val

0 a 0 3.5

2 a 2 3.5

3 a 3 3.5

1 b 1 7.0

4 b 4 7.0

5 c 5 NaN

对于层次化索引的数据,事情稍微复杂:

In [33]: lefth=DataFrame({'key1':['Ohio','Ohio','Ohio','Nevada','Nevada'],'key2':[2000,2001,2002,2001,2002],'data':numpy.arange(5.)})

In [37]: righth=DataFrame(np.arange(12).reshape((6,2)),index=[['Nevada','Nevada','Ohio','Ohio','Ohio','Ohio'],[2001,2000,2000,2000,2001,2002]],columns=['event1','event2'])

In [38]: lefth

Out[38]:

data key1 key2

0 0.0 Ohio 2000

1 1.0 Ohio 2001

2 2.0 Ohio 2002

3 3.0 Nevada 2001

4 4.0 Nevada 2002

In [39]: righth

Out[39]:

event1 event2

Nevada 2001 0 1

2000 2 3

Ohio 2000 4 5

2000 6 7

2001 8 9

2002 10 11

上面这种情况,你必须以列表的形式指明用作合并键的多个列:

In [40]: pd.merge(lefth,righth,left_on=['key1','key2'],right_index=True)

Out[40]:

data key1 key2 event1 event2

0 0.0 Ohio 2000 4 5

0 0.0 Ohio 2000 6 7

1 1.0 Ohio 2001 8 9

2 2.0 Ohio 2002 10 11

3 3.0 Nevada 2001 0 1

In [41]: pd.merge(lefth,righth,left_on=['key1','key2'],right_index=True,how='outer')

Out[41]:

data key1 key2 event1 event2

0 0.0 Ohio 2000 4.0 5.0

0 0.0 Ohio 2000 6.0 7.0

1 1.0 Ohio 2001 8.0 9.0

2 2.0 Ohio 2002 10.0 11.0

3 3.0 Nevada 2001 0.0 1.0

4 4.0 Nevada 2002 NaN NaN

4 NaN Nevada 2000 2.0 3.0

同时使用合并双方的索引:

In [49]: left2=DataFrame([[1.,2.],[3.,4.],[5.,6.]],index=['a','b','e'],columns=['Ohio','Nevada'])

In [50]: right2=DataFrame([[7.,8.],[9.,10.],[11.,12.],[13.,14.]],index=['b','d','d','e'],columns=['Missouri','Alabama'])

In [51]: left2

Out[51]:

Ohio Nevada

a 1.0 2.0

b 3.0 4.0

e 5.0 6.0

In [52]: right2

Out[52]:

Missouri Alabama

b 7.0 8.0

d 9.0 10.0

d 11.0 12.0

e 13.0 14.0

In [53]: pd.merge(left2,right2,how='outer',left_index=True,right_index=True)

Out[53]:

Ohio Nevada Missouri Alabama

a 1.0 2.0 NaN NaN

b 3.0 4.0 7.0 8.0

d NaN NaN 9.0 10.0

d NaN NaN 11.0 12.0

e 5.0 6.0 13.0 14.0

DataFrame 还有一个join的方法,更为方便的实现按索引进行合并。还用于合并带有多个相同或相似索引的DataFrame对象,而不管他们之间的有没有重叠的列。

In [54]: left2.join(right2,how='outer')

Out[54]:

Ohio Nevada Missouri Alabama

a 1.0 2.0 NaN NaN

b 3.0 4.0 7.0 8.0

d NaN NaN 9.0 10.0

d NaN NaN 11.0 12.0

e 5.0 6.0 13.0 14.0

DataFrame的join方法是连接键上做的左连接,还支持参数DataFrame的索引跟调用者DataFrame的某个列之间的连接:

In [55]: left1.join(right1,on='key')

Out[55]:

key value group_val

0 a 0 3.5

1 b 1 7.0

2 a 2 3.5

3 a 3 3.5

4 b 4 7.0

5 c 5 NaN

对于简单的索引合并,还可以向join传入一组DataFrame :

In [56]: another=DataFrame([[7.,8.],[9.,10.],[11.,12.],[16.,17.]],index=['a','c','e','f'],columns=['New York','Oregon'])

In [58]: left2.join([right2,another])

Out[58]:

Ohio Nevada Missouri Alabama New York Oregon

a 1.0 2.0 NaN NaN 7.0 8.0

b 3.0 4.0 7.0 8.0 NaN NaN

c NaN NaN NaN NaN 9.0 10.0

d NaN NaN 9.0 10.0 NaN NaN

d NaN NaN 11.0 12.0 NaN NaN

e 5.0 6.0 13.0 14.0 11.0 12.0

f NaN NaN NaN NaN 16.0 17.0

In [59]: left2.join([right2,another],how='outer')

Out[59]:

Ohio Nevada Missouri Alabama New York Oregon

a 1.0 2.0 NaN NaN 7.0 8.0

b 3.0 4.0 7.0 8.0 NaN NaN

c NaN NaN NaN NaN 9.0 10.0

d NaN NaN 9.0 10.0 NaN NaN

d NaN NaN 11.0 12.0 NaN NaN

e 5.0 6.0 13.0 14.0 11.0 12.0

f NaN NaN NaN NaN 16.0 17.0

7.1.3轴向连接

另一种数据合并运算也被称作连接(connection)、绑定(binding)、或堆叠(stacking)

Numpy有一个用于合并原始Numpy数组的concatenation 函数:

In [11]: arr=np.arange(12).reshape((3,4))

In [12]: arr

Out[12]:

array([[ 0, 1, 2, 3],

[ 4, 5, 6, 7],

[ 8, 9, 10, 11]])

In [13]: np.concatenate([arr,arr],axis=1)

Out[13]:

array([[ 0, 1, 2, ..., 1, 2, 3],

[ 4, 5, 6, ..., 5, 6, 7],

[ 8, 9, 10, ..., 9, 10, 11]])

对于pandas对象(如Series和DataFrame),带有标签的轴能够进推广数组的连接

运算。

对pandas的concat提供的方式。

我们假设有三个没有重叠索引的Series :

In [16]: from pandas import DataFrame,Series

In [17]: s1=Series([0,1],index=['a','b'])

In [18]: s2=Series([2,3,4],index=['c','d','e'])

In [19]: s3=Series([5,6],index=['f','g'])

In [21]: s1

Out[21]:

a 0

b 1

dtype: int64

In [22]: s2

Out[22]:

c 2

d 3

e 4

dtype: int64

In [23]: s3

Out[23]:

f 5

g 6

dtype: int64

In [24]: pd.concat([s1,s2,s3])

Out[24]:

a 0

b 1

c 2

d 3

e 4

f 5

g 6

dtype: int64

In [25]: pd.concat([s1,s2,s3],axis=0)

Out[25]:

a 0

b 1

c 2

d 3

e 4

f 5

g 6

dtype: int64

In [26]: pd.concat([s1,s2,s3],axis=1)

Out[26]:

0 1 2

a 0.0 NaN NaN

b 1.0 NaN NaN

c NaN 2.0 NaN

d NaN 3.0 NaN

e NaN 4.0 NaN

f NaN NaN 5.0

g NaN NaN 6.0

默认状态,不特殊指定,concat的axis=0,会生成一个新的Series ;

如果axis=1,结果会变为DataFrame ,而且我们再传入join='inner'就可以得到他们的交集

In [27]: [s1*5,s3]

Out[27]:

[a 0

b 5

dtype: int64, f 5

g 6

dtype: int64]

In [28]: s1*5

Out[28]:

a 0

b 5

dtype: int64

In [29]: s4=pd.concat([s1*5,s3])

In [30]: pd.concat([s1,s4],axis=1)

Out[30]:

0 1

a 0.0 0

b 1.0 5

f NaN 5

g NaN 6

In [31]: pd.concat([s1,s4],axis=1,join='inner')

Out[31]:

0 1

a 0 0

b 1 5

可以通过join_axes 指定要在其他轴上使用的索引:

In [32]: pd.concat([s1,s4],axis=1,join_axes=[['a','c','b','e']])

Out[32]:

0 1

a 0.0 0.0

c NaN NaN

b 1.0 5.0

e NaN NaN

上面的代码有个问题,参与连接的片段在结果中无法区分。

使用keys参数可以在连接轴上创建一个层次化索引。

In [33]: pd.concat([s1,s2,s3],axis=1)

Out[33]:

0 1 2

a 0.0 NaN NaN

b 1.0 NaN NaN

c NaN 2.0 NaN

d NaN 3.0 NaN

e NaN 4.0 NaN

f NaN NaN 5.0

g NaN NaN 6.0

In [34]: pd.concat([s1,s2,s3],keys=['one','two'])

Out[34]:

one a 0

b 1

two c 2

d 3

e 4

dtype: int64

In [35]: pd.concat([s1,s2,s3],keys=['one','two','three'])

Out[35]:

one a 0

b 1

two c 2

d 3

e 4

three f 5

g 6

dtype: int64

In [36]: result=pd.concat([s1,s2,s3],keys=['one','two','three'])

In [37]: result.unstack()

Out[37]:

a b c d e f g

one 0.0 1.0 NaN NaN NaN NaN NaN

two NaN NaN 2.0 3.0 4.0 NaN NaN

three NaN NaN NaN NaN NaN 5.0 6.0

如果我们沿着axis=1对Series进行合并,那么keys就会成为DataFrame的例头:

In [38]: pd.concat([s1,s2,s3],axis=1,keys=['one','two','three'])

Out[38]:

one two three

a 0.0 NaN NaN

b 1.0 NaN NaN

c NaN 2.0 NaN

d NaN 3.0 NaN

e NaN 4.0 NaN

f NaN NaN 5.0

g NaN NaN 6.0

In [39]: pd.concat([s1,s2,s3],axis=1)

Out[39]:

0 1 2

a 0.0 NaN NaN

b 1.0 NaN NaN

c NaN 2.0 NaN

d NaN 3.0 NaN

e NaN 4.0 NaN

f NaN NaN 5.0

g NaN NaN 6.0

这样对于DataFrame也是相同的:

In [40]: df1=DataFrame(np.arange(6).reshape(3,2),index=['a','b','c'],columns=['one','two'])

In [41]: df2=DataFrame(5+np.arange(4).reshape(2,2),index=['a','c'],columns=['three','four'])

In [43]: df1

Out[43]:

one two

a 0 1

b 2 3

c 4 5

In [44]: df2

Out[44]:

three four

a 5 6

c 7 8

In [45]: pd.concat([df1,df2],axis=1,keys=['level1','level2'])

Out[45]:

level1 level2

one two three four

a 0 1 5.0 6.0

b 2 3 NaN NaN

c 4 5 7.0 8.0

7.1.4合并重叠数据

还有一种是数据组合是不能用简单的合并(merge)或连接(concatenation)运算来处理。

比如,可能索引全部或部分重叠的两个数据集。

我们使用numpy的where函数,用于表达一种矢量化的if-else:

In [13]: a=Series([np.nan,2.5,np.nan,3.5,4.5,np.nan],index=['f','e','d','c','b','a'])

In [14]: b=Series(numpy.arange(len(a),dtype=np.float64),index=['f','e','d','c','b','a'])

In [15]: b[-1]=np.nan

In [16]: a

Out[16]:

f NaN

e 2.5

d NaN

c 3.5

b 4.5

a NaN

dtype: float64

In [17]: b

Out[17]:

f 0.0

e 1.0

d 2.0

c 3.0

b 4.0

a NaN

dtype: float64

In [31]: (pd.isnull(a),b,a)

Out[31]:

(f True

e False

d True

c False

b False

a True

dtype: bool, f 0.0

e 1.0

d 2.0

c 3.0

b 4.0

a NaN

dtype: float64, f NaN

e 2.5

d NaN

c 3.5

b 4.5

a NaN

dtype: float64)

In [33]: np.where(pd.isnull(a),b,a)

Out[33]: array([ 0. , 2.5, 2. , 3.5, 4.5, nan])

Series还有一个combine_first 方法,实现的也是这种功能:

In [34]: b[:-2].combine_first(a[2:])

Out[34]:

a NaN

b 4.5

c 3.0

d 2.0

e 1.0

f 0.0

对于DataFrame,combine_first自然也会在列上做同样的事情,因此可以将其看做:

用参数对象中的数据为调用者对象的缺失数据“打补丁”:

In [36]: df1=DataFrame({'a':[1.,np.nan,5.,np.nan],'b':[np.nan,2.,np.nan,6.],'c':range(2,18,4)})

In [37]: df2=DataFrame({'a':[5.,4.,np.nan,3.,7.],'b':[np.nan,3.,4.,6.,8.]})

In [38]: df1

Out[38]:

a b c

0 1.0 NaN 2

1 NaN 2.0 6

2 5.0 NaN 10

3 NaN 6.0 14

In [39]: df2

Out[39]:

a b

0 5.0 NaN

1 4.0 3.0

2 NaN 4.0

3 3.0 6.0

4 7.0 8.0

In [40]: df1.combine_first(df2)

Out[40]:

a b c

0 1.0 NaN 2.0

1 4.0 2.0 6.0

2 5.0 4.0 10.0

3 3.0 6.0 14.0

4 7.0 8.0 NaN

7.2 重塑和轴向旋转

许多用于重新排列表格型数据的基础运算,这些函数也称作重塑(reshape)或轴向旋转(pivot)运算。

7.2.1 重塑层次化索引

层次化索引为DataFrame数据的重排任务提供了很好的一致性方式。

主要功能:

stack:将数据的列“旋转”为行

unstack:将数据的行“旋转”为列

下面给出一个例子,其中的行列索引均为字符串:

In [41]: data=DataFrame(np.arange(6).reshape((2,3)),index=pd.Index(['Ohio','Colorado'],name='state'),columns=pd.Index(['one','two','three'],name='number'))

In [42]: data

Out[42]:

number one two three

state

Ohio 0 1 2

Colorado 3 4 5

In [43]: result=data.stack()

In [44]: result

Out[44]:

state number

Ohio one 0

two 1

three 2

Colorado one 3

two 4

three 5

dtype: int32

对于层次化索引的Series,我们可以用unstack将其重排成一个DataFrame:

In [53]: result.unstack()

Out[53]:

number one two three

state

Ohio 0 1 2

Colorado 3 4 5

默认下,unstack操作的是内层,传入分级层级别的编号或名称即可对其他级别进行unstack操作:

In [54]: result.unstack(0)

Out[54]:

state Ohio Colorado

number

one 0 3

two 1 4

three 2 5

In [55]: result.unstack('state')

Out[55]:

state Ohio Colorado

number

one 0 3

two 1 4

three 2 5

如果不是所有的级别值都能在各分组中找到的话,则unstack操作可能会引入缺失数据:

In [56]: s1=Series([0,1,2,3],index=['a','b','c','d'])

In [57]: s2=Series([4,5,6],index=['c','d','e'])

In [58]: s1

Out[58]:

a 0

b 1

c 2

d 3

dtype: int64

In [59]: s2

Out[59]:

c 4

d 5

e 6

dtype: int64

In [60]: data2=pd.concat([s1,s2],keys=['one','two'])

In [61]: data2

Out[61]:

one a 0

b 1

c 2

d 3

two c 4

d 5

e 6

dtype: int64

In [62]: data2.unstack()

Out[62]:

a b c d e

one 0.0 1.0 2.0 3.0 NaN

two NaN NaN 4.0 5.0 6.0

stack默认会滤除数据,因此该运算是可逆的,

In [63]: data2.unstack().stack()

Out[63]:

one a 0.0

b 1.0

c 2.0

d 3.0

two c 4.0

d 5.0

e 6.0

dtype: float64

In [64]: data2.unstack().stack(dropna=False)

Out[64]:

one a 0.0

b 1.0

c 2.0

d 3.0

e NaN

two a NaN

b NaN

c 4.0

d 5.0

e 6.0

dtype: float64

在对DataFrame进行unstack操作时,作为旋转轴的级别将会成为结果中的最低级别:

In [65]: df=DataFrame({'left':result,'right':result+5},columns=pd.Index(['left','right'],name='side'))

In [66]: df

Out[66]:

side left right

state number

Ohio one 0 5

two 1 6

three 2 7

Colorado one 3 8

two 4 9

three 5 10

In [68]: df.unstack('state')

Out[68]:

side left right

state Ohio Colorado Ohio Colorado

number

one 0 3 5 8

two 1 4 6 9

three 2 5 7 10

In [69]: df.unstack('state').stack('side')

Out[69]:

state Ohio Colorado

number side

one left 0 3

right 5 8

two left 1 4

right 6 9

three left 2 5

right 7 10

7.2.2 将“长格式”旋转为“宽格式”

时间序列数据通常时以所谓的“长格式”(long)或“堆叠格式”(stacked)存储在数据库和csv中:

In [6]: ldata = DataFrame({'date':['03-31','03-31','03-31','06-30','06-30','06-30'],

...: 'item':['real','infl','unemp','real','infl','unemp'],'value':['2710.','000.','5.8','2778.','2.34','5.1']})

...: print 'ldata is \n',ldata

...:

ldata is

date item value

0 03-31 real 2710.

1 03-31 infl 000.

2 03-31 unemp 5.8

3 06-30 real 2778.

4 06-30 infl 2.34

5 06-30 unemp 5.1

关系型数据库(MYSQL)中的数据经常都是这样存储的,因为固定的架构(固定的架构指的是列名和数据类型)的优点在于: 随着表中数据的添加或者删除,item列中的值种类能够增加减少。

DataFrame 中的pivot,容易操作长格式的数据。

In [8]: pivoted=ldata.pivot('date','item','value')

In [9]: pivoted.head()

Out[9]:

item infl real unemp

date

03-31 000. 2710. 5.8

06-30 2.34 2778. 5.1

我们给出6个value2的值,此时我们假设有两个参数需要重塑,

In [10]: ldata['value2']=np.random.randn(len(ldata))

In [11]: len(ldata)

Out[11]: 6

In [12]: np.random.randn(len(ldata))

Out[12]:

array([-0.18423106, 1.01403302, 0.37678059, 1.66064609, 0.47334253,

-0.57611932])

In [13]: ldata['value2']

Out[13]:

0 0.396190

1 -0.064478

2 1.783752

3 0.008722

4 -0.316903

5 -0.329522

Name: value2, dtype: float64

In [14]: ldata[:6]

Out[14]:

date item value value2

0 03-31 real 2710. 0.396190

1 03-31 infl 000. -0.064478

2 03-31 unemp 5.8 1.783752

3 06-30 real 2778. 0.008722

4 06-30 infl 2.34 -0.316903

5 06-30 unemp 5.1 -0.329522

忽略最后一个参数,得到的DataFrame就会带有层次化的列:

In [15]: pivoted=ldata.pivot('date','item')

In [16]: pivoted

Out[16]:

value value2

item infl real unemp infl real unemp

date

03-31 000. 2710. 5.8 -0.064478 0.396190 1.783752

06-30 2.34 2778. 5.1 -0.316903 0.008722 -0.329522

In [17]: pivoted[:6]

Out[17]:

value value2

item infl real unemp infl real unemp

date

03-31 000. 2710. 5.8 -0.064478 0.396190 1.783752

06-30 2.34 2778. 5.1 -0.316903 0.008722 -0.329522

In [18]: pivoted['value'][:5]

Out[18]:

item infl real unemp

date

03-31 000. 2710. 5.8

06-30 2.34 2778. 5.1

pivot是一个快捷方式,用set_index创建的层次化索引,再用unstack重塑。

In [19]: unstacked=ldata.set_index(['date','item']).unstack('item')

In [20]: unstacked[:6]

Out[20]:

value value2

item infl real unemp infl real unemp

date

03-31 000. 2710. 5.8 -0.064478 0.396190 1.783752

06-30 2.34 2778. 5.1 -0.316903 0.008722 -0.329522